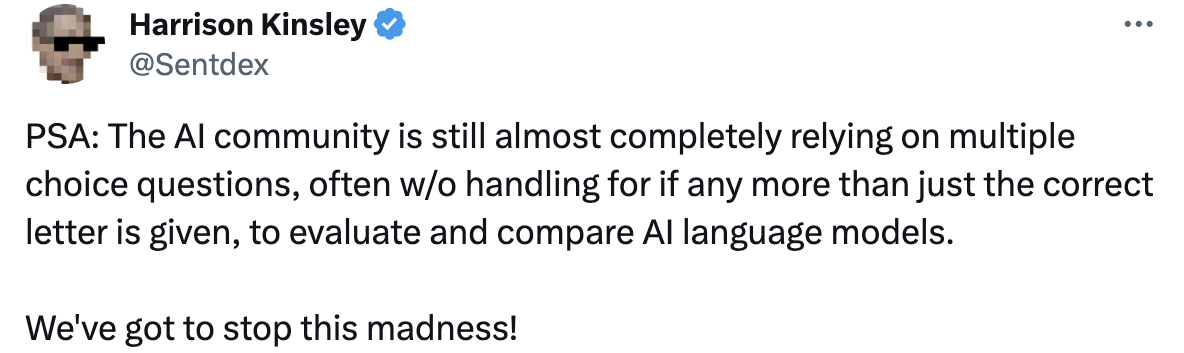

The 🤗 Open LLM Leaderboard is a widely used benchmark for comparing LLMs. Despite its popularity, there have been questions about the validity and usefulness of the benchmark for evaluating real-world performance, with some even saying "the land of open benchmarks is pointless and measures almost nothing useful".

In this report, we use Zeno to dive into the data and explore what the benchmark actually measures. What tasks does it test? What does the data look like?

We find that it is indeed hard to gauge the real-world usability of LLMs from the leaderboard's results, as the tasks it includes are disconnected from how LLMs are used in practice. Furthermore, we find clear ways the leaderboard can be gamed, such as by exploiting the common structure of ground truth labels. In sum, we hope that this report demonstrates the importance of testing your model in a disaggregated way on data that is representative of the downstream use cases you care about.
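
To make "disaggregated" concrete, here is a minimal sketch of the idea in plain Python. It is not how Zeno or the leaderboard computes anything; the slice names and results are made up purely for illustration. It shows how a single aggregate accuracy can hide very different behavior on different subsets of the data.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Compute overall accuracy and accuracy per slice.

    `records` is an iterable of (slice_name, is_correct) pairs, where
    `slice_name` is any label you use to group examples.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, is_correct in records:
        totals[slice_name] += 1
        correct[slice_name] += int(is_correct)

    overall = sum(correct.values()) / sum(totals.values())
    per_slice = {name: correct[name] / totals[name] for name in totals}
    return overall, per_slice


# Hypothetical results (assumption): two invented slices of an evaluation set.
records = (
    [("short_questions", True)] * 8
    + [("short_questions", False)] * 2
    + [("long_questions", True)] * 1
    + [("long_questions", False)] * 4
)

overall, per_slice = accuracy_by_slice(records)
print(f"overall: {overall:.2f}")
for name, acc in per_slice.items():
    print(f"{name}: {acc:.2f}")
```

In this toy data the overall accuracy looks moderate, while one slice is far weaker than the other. A single leaderboard number would average that gap away.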